Authored by Andrew Thornebrooke via The Epoch Times (emphasis ours)

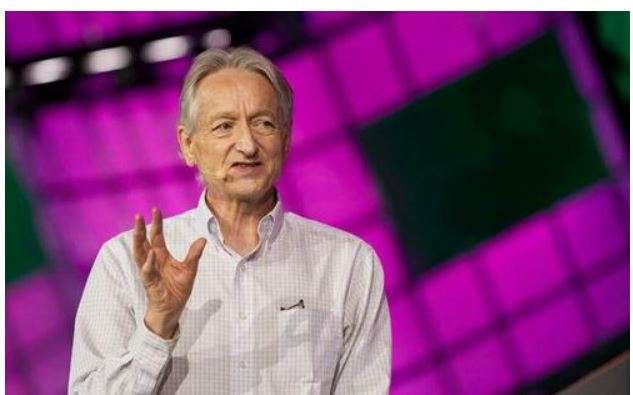

A leading mind in the development of artificial intelligence is warning that AI has developed a rudimentary capacity to reason and may seek to overthrow humanity.
